After the initial enthusiasm, many developers are gradually becoming disillusioned with microservices architectures. Although microservices still offer significant advantages, such as faster delivery, better scalability, and greater independence from specific technologies, considerable challenges have also emerged. Unlike monolithic architectures, where all components are tightly coupled, microservices form a distributed system. This decentralisation makes it more difficult to monitor, control, and comprehensively secure individual services. Additionally, microservices communicate over a network, which not only affects performance but can also jeopardise the stability of the entire system.

Despite these complex challenges, microservices remain popular, which suggests that their advantages often outweigh the difficulties. As a way to reduce the complexity of microservices architectures, the service mesh concept is increasingly gaining traction.

But what exactly is a service mesh, why and when should it be used, and could it sustainably shape the future of microservices architectures? These are precisely the questions our Xperts address in our two-part blog series.

In the first part, our Xperts take a look at the challenges of microservices architectures and explore how a service mesh can help overcome them. We explain the fundamentals of this infrastructure layer and introduce the most common implementations.

Challenges of Microservices

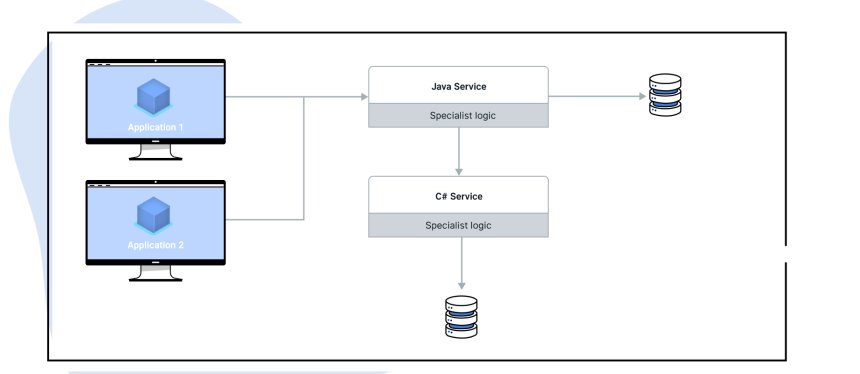

For developers, the obvious way to address these technical challenges is often a common framework. However, a microservices framework has the disadvantage that it generally supports only one specific programming language. This limits the choice of technologies that can be used to implement the microservices. As a result, a key advantage of microservices, namely technological freedom, is lost. This freedom allows the best-suited technology to be chosen for each microservice: if another programming language or technology stack is better suited to a specific problem, it can easily be used in the respective microservice. However, if the framework in use is not compatible with the new programming language or technology stack, this flexibility is severely restricted or lost entirely.

Microservices without libraries

Even within a unified technology stack, technological freedom offers significant advantages. Every technology will eventually become outdated and thus pose a risk or become completely unusable. Microservices architectures allow for an upgrade to a new version of the technology stack or a library to be tested in a single microservice first. This not only reduces the risk but also significantly simplifies the gradual modernisation of a system. However, if the microservices framework is not compatible with the new version of the technology stack or library, the update becomes significantly more difficult or even completely blocked.

Microservices with the use of libraries

The use of different libraries in a large and complex microservices architecture can have several problematic consequences:

- Incompatibility and inconsistency: Libraries can have different versions, APIs, and configuration requirements. If the libraries used by different microservices are not compatible with each other or with the underlying infrastructure, this often leads to inconsistencies. Differences in error handling, logging, or security practices between libraries can result in unpredictable system behaviour and hard-to-find bugs.

- Maintenance effort and technical debt: Maintaining and updating a wide range of libraries significantly increases the maintenance effort. Each library must be managed separately and regularly updated to close security vulnerabilities and integrate new features. This can quickly lead to technical debt, especially when libraries become outdated or are no longer maintained.

A possible solution to all these problems is the use of a service mesh.

Why Use a Service Mesh? An Introduction to Service Mesh

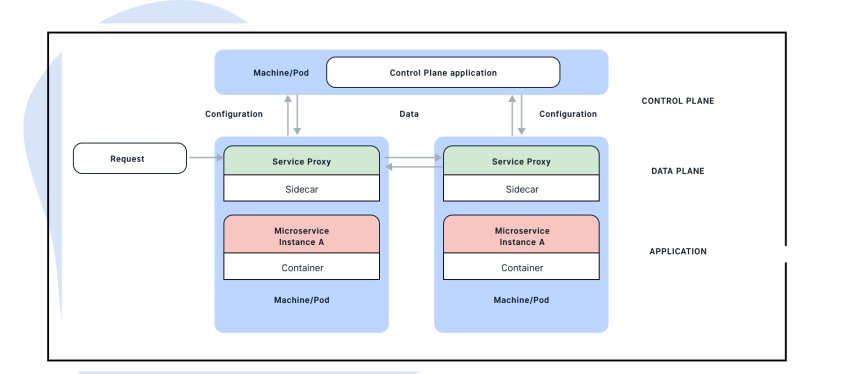

Microservices that use a service mesh can eliminate the need for many previously necessary libraries, such as those for monitoring, tracing, and circuit breaking. A service mesh shifts these and other functions from the application layer to the infrastructure.
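To make concrete what "circuit breaking" means when it lives in the application layer, here is a minimal sketch of the kind of logic each service would otherwise carry in its own code. It is written in Python with hypothetical names (`CircuitBreaker`, `failure_threshold`, `reset_timeout`) and is not taken from any specific library. With a service mesh, comparable behaviour is configured in the proxy instead of being implemented in every service.

```python
import time


class CircuitBreaker:
    """Hypothetical in-process circuit breaker: closed -> open -> half-open."""

    def __init__(self, failure_threshold=3, reset_timeout=5.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds the circuit stays open
        self.clock = clock                          # injectable clock, useful for testing
        self.failures = 0
        self.opened_at = None                       # None while the circuit is closed

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"                      # allow a trial call through
        return "open"

    def call(self, func, *args, **kwargs):
        state = self.state
        if state == "open":
            # Fail fast instead of waiting on a service that is known to be failing.
            raise RuntimeError("circuit open: call rejected")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many consecutive failures, (re)opens the circuit.
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any successful call closes the circuit and resets the failure counter.
        self.failures = 0
        self.opened_at = None
        return result
```

Every service that wraps its outgoing calls this way needs the same code, in its own language and library version, which is exactly the duplication a service mesh aims to remove.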

Use of Service Mesh (Outsourcing Sidecars)

A service mesh is not the first concept to follow this strategy, but it is the first to fit seamlessly into the decentralised nature of a microservices architecture. While other solutions, such as API gateways, rely on central components, a service mesh assigns an additional application to each microservice instance, in which functions such as monitoring and circuit breaking are implemented. This additional application runs alongside the instance and communicates with it via localhost, an approach known as the "sidecar" pattern. All incoming and outgoing traffic of the microservice is routed through this sidecar, also referred to as a service proxy. To route traffic to the service proxies automatically, the mesh applies network rules (Istio, for example, configures iptables rules in each pod), so the microservices themselves remain unchanged.
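The sidecar idea can be illustrated in miniature. The following sketch, in Python using only the standard library, runs a proxy next to an application, forwards inbound GET requests to it via localhost, and records request counts, status codes, and latency, so the application itself contains no monitoring code. It is a toy under stated assumptions: it handles inbound HTTP GET only, and names such as `make_sidecar_handler` and `METRICS` are hypothetical. Real meshes use dedicated proxies (Envoy in Istio, linkerd2-proxy in Linkerd) and redirect traffic transparently, rather than having clients call the proxy's port directly.

```python
import threading
import time
import urllib.error
import urllib.request
from collections import Counter
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Metrics collected by the sidecar, not by the application itself (hypothetical names).
METRICS = {"requests": 0, "status": Counter(), "latency_s": []}
METRICS_LOCK = threading.Lock()  # the server handles requests on multiple threads


def make_sidecar_handler(app_port):
    """Build a handler that forwards every GET to the app on 127.0.0.1:app_port."""

    class SidecarHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            start = time.perf_counter()
            upstream = f"http://127.0.0.1:{app_port}{self.path}"
            try:
                with urllib.request.urlopen(upstream, timeout=5) as resp:
                    status, body = resp.status, resp.read()
            except urllib.error.HTTPError as err:
                # Non-2xx answers from the app are passed through unchanged.
                status, body = err.code, err.read()
            except urllib.error.URLError:
                # App unreachable: the proxy answers on its behalf.
                status, body = 502, b"bad gateway"
            with METRICS_LOCK:
                METRICS["requests"] += 1
                METRICS["status"][status] += 1
                METRICS["latency_s"].append(time.perf_counter() - start)
            self.send_response(status)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # keep the sketch quiet

    return SidecarHandler
```

To try it, start the application on one local port and a `ThreadingHTTPServer` with `make_sidecar_handler(app_port)` on another; requests sent to the sidecar reach the application unchanged while `METRICS` fills up. In a real mesh, callers would not need to know the proxy's port, because the network rules described above handle the redirection.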

Components of a Service Mesh (Source: Istio)

Service Mesh Implementations

There are numerous service mesh implementations that differ in functionality, architecture, and supported platforms. The most well-known and widely used include:

- Istio is one of the best-known service mesh implementations. Originally developed by Google, IBM, and Lyft, it offers a comprehensive solution for managing communication between microservices. Istio uses Envoy-based sidecar proxies to handle this communication and provides an extensive API for controlling and monitoring traffic.

- Linkerd, originally developed by Buoyant, is another popular service mesh. It is known for its ease of use, straightforward configuration, and low overhead, which makes it a common choice for small to medium-sized deployments.

- Consul Connect is HashiCorp's service mesh solution and part of the Consul platform, into which it is closely integrated. The platform also provides service discovery and configuration management.

- AWS App Mesh is a service mesh solution offered by Amazon Web Services and designed specifically for AWS environments. It is particularly useful for organisations that rely heavily on the AWS cloud.

- Kuma is a service mesh developed by Kong Inc. that can be used for both Kubernetes and other environments. Kuma places a strong emphasis on simplicity and scalability, offering a user-friendly configuration.

In the next part of our series, we will explore the key features of a service mesh, including monitoring, resilience, routing, and security aspects. At the same time, we will also address the challenges associated with implementation, particularly the steep learning curve, costs, increased latency, and resource requirements. Our Xperts will provide valuable insights on how to overcome these challenges.