The term serverless covers a wide range of technologies offered by the major cloud hosting providers: AWS, GCP and Azure. In this blog entry, we will concentrate on one serverless technology offered by each of them: Function as a Service (FaaS). FaaS offers a rapid path to writing code that focusses on the problem to be solved rather than on infrastructural boilerplate, is highly scalable by design and incurs costs in proportion to usage.

Its inherent scalability and the tight coupling of costs to usage make FaaS particularly useful for use cases with extremely variable load, such as IoT or ETL workloads. It also offers a way to create an orchestration layer in front of existing backends, enabling major transformations behind that layer without impacting clients.

Comparing clouds

But there are thousands of articles out there that explain what FaaS is and why you should use it. The goal of this article is to compare the offerings. As a technology provider that has worked with each of the major clouds across many projects, we are in a good position to make such a comparison. Because each cloud provider implements FaaS slightly differently, with its own advantages and drawbacks, a comparison is useful when deciding which provider to use in projects that rely heavily on FaaS. In this article we look at the FaaS offering of each cloud provider (Google Cloud Functions, Azure Functions and AWS Lambda) and compare them in a number of important areas.

In the context of this evaluation, we focus on the use case of developing a simple API whose job is to take client requests, transform them and distribute them to different backend services. This use case places more weight on uptime than other FaaS use cases such as batch processing or streaming events from IoT devices.

Execution Environment

One of the pitfalls of creating systems that need to operate at any kind of scale is to design them synchronously, ignoring the fact that most information workflows in the real world are asynchronous. Think even of a conversation: when one person is talking, the other is not doing nothing; in the best case they are listening and, in the worst, they are ignoring what is being said and deciding what to say next. The default approach should not be to create a chain of REST requests just to be able to return a response to the user immediately. This is poor design: both fragile and inflexible for future changes.

FaaS makes it easy to design and implement systems asynchronously. Asynchronicity, however, forces the developer to think about concurrency. If the system can scale up at a blistering pace and the workflow is not directly coupled to user requests (think of a queue of events that has been building up for an hour), then each part of the system needs to be built so that it does not effectively DDoS the downstream systems it calls.
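One common way to keep such a system from overwhelming a downstream service is to cap the number of calls each function instance has in flight at once. The TypeScript below is a minimal sketch of that idea, not a provider feature: `createLimiter`, `callBackend` and the limit of 3 are hypothetical. It only bounds concurrency within a single instance; the total load on the backend is still roughly this limit multiplied by the number of running instances, which is why the instance limits discussed in the next section matter as well.

```typescript
// Hypothetical helper: caps how many async tasks run at once within one instance.
function createLimiter(maxInFlight: number) {
  let active = 0;
  const waiting: Array<() => void> = [];

  // Resolves once a slot is free; the slot is claimed before resolving.
  const acquire = (): Promise<void> =>
    new Promise<void>((resolve) => {
      if (active < maxInFlight) {
        active++;
        resolve();
      } else {
        waiting.push(() => {
          active++;
          resolve();
        });
      }
    });

  // Frees a slot and hands it straight to the next waiting task, if any.
  const release = (): void => {
    active--;
    const next = waiting.shift();
    if (next) next();
  };

  return async function limit<T>(task: () => Promise<T>): Promise<T> {
    await acquire();
    try {
      return await task();
    } finally {
      release();
    }
  };
}

// Usage: 10 downstream calls, never more than 3 in flight at once.
const limit = createLimiter(3);
let inFlight = 0;
let peak = 0;

// Hypothetical backend call; the timeout stands in for real network latency.
async function callBackend(id: number): Promise<number> {
  inFlight++;
  peak = Math.max(peak, inFlight);
  await new Promise<void>((resolve) => setTimeout(resolve, 10));
  inFlight--;
  return id;
}

const results = Promise.all(
  Array.from({ length: 10 }, (_, i) => limit(() => callBackend(i))),
);
```

All ten calls complete, but `peak` never exceeds 3. Queuing inside the instance trades latency for protection of the backend, which suits asynchronous workloads better than user-facing requests.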

Concurrency by horizontal scaling

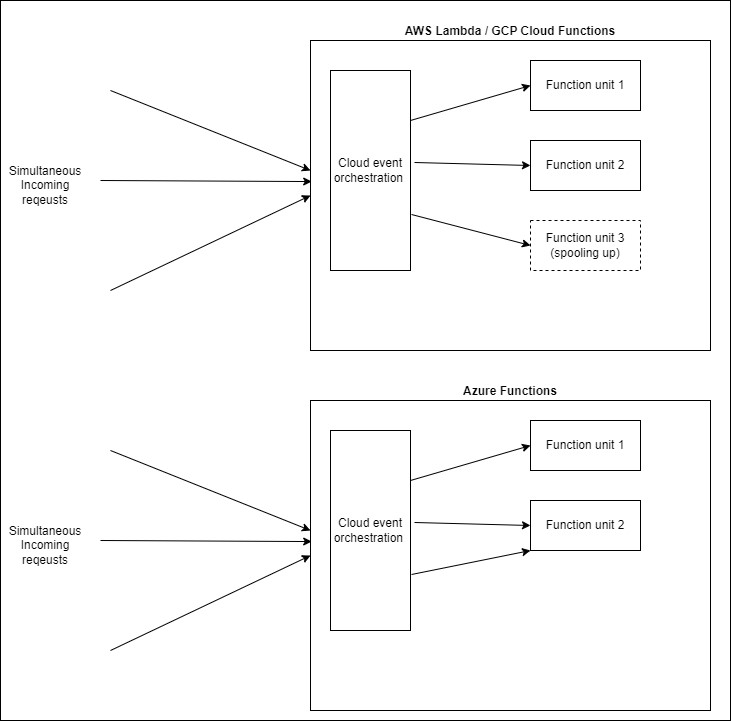

The execution environment of each unit in FaaS defines how it handles concurrency, which makes the unit a good place to start our comparison. To leverage FaaS to the fullest, it is useful to consider each function a “nano service”, in that each function provides a single piece of functionality independently of other functions. While all three FaaS offerings let the developer define upper (and even lower) bounds for scaling by units (horizontal scaling), those units sit at a different level of abstraction in Azure than in AWS and GCP. In AWS and GCP, the configurable unit, including its execution environment, is a single function. In the context of a REST API, this could translate to one endpoint. This makes it simple and natural to define scaling on an endpoint-by-endpoint basis: each function/endpoint can be configured and deployed independently of the others.

The configurable unit of Azure Functions is the “function app”: a group of functions that are deployed together and operate as one logical unit. Taking the example of the REST API again, this could mean an entire REST resource (GET, POST, PUT and DELETE instead of just GET). One disadvantage of this approach should be immediately clear from our example: the POST endpoint cannot be scaled independently of the GET endpoint. Imagine the REST resource was /orders. The creation of new orders and the retrieval of existing ones will very likely need different scaling parameters, and allowing creation at the same maximum rate as retrieval could conceivably cause problems downstream.

Of course, this problem can be addressed quite easily: by putting the order retrieval and order creation endpoints in separate function apps. But it is important to understand this concept before deciding how to design each function app.
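To make the difference concrete, the following sketch models deployment units as plain data. It is purely illustrative: the `DeploymentUnit` type, the unit names and the instance limits are hypothetical and do not correspond to any provider's configuration format. The point is that a scaling limit belongs to a unit, so every endpoint inside the same function app inherits the same ceiling.

```typescript
// Hypothetical model: a scaling limit is attached to a deployment unit,
// and every endpoint inside that unit shares it.
interface DeploymentUnit {
  name: string;
  maxInstances: number;
  endpoints: string[]; // e.g. "GET /orders"
}

function maxInstancesFor(endpoint: string, units: DeploymentUnit[]): number {
  const unit = units.find((u) => u.endpoints.includes(endpoint));
  if (!unit) throw new Error(`No unit serves ${endpoint}`);
  return unit.maxInstances;
}

// One function app for the whole /orders resource: reads and writes share a ceiling.
const singleApp: DeploymentUnit[] = [
  { name: "orders-app", maxInstances: 50, endpoints: ["GET /orders", "POST /orders"] },
];

// Two function apps: order creation can be capped independently of retrieval.
const splitApps: DeploymentUnit[] = [
  { name: "orders-read-app", maxInstances: 50, endpoints: ["GET /orders"] },
  { name: "orders-write-app", maxInstances: 5, endpoints: ["POST /orders"] },
];
```

With `singleApp`, `POST /orders` can scale to the same 50 instances as `GET /orders`; with `splitApps` it is limited to 5. In AWS and GCP, the second layout is effectively the default, since every function is its own unit.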

Concurrency within a unit

In addition to concurrency via multiple function instances, Azure Functions has another way to serve multiple requests at once: concurrency within each function instance itself. To understand what this means and why Azure offers it, a quick explanation of an important part of the FaaS lifecycle is necessary: the cold start. Horizontal scaling has a cost: each time a new function unit is created to serve traffic, it takes some time to come online. Understandably so, since an entirely new execution environment (JRE, Node.js instance etc.) is being spun up together with the function’s code and dependencies.
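A widely used way to soften the cost of cold starts is to perform expensive initialization (SDK clients, configuration parsing, connection pools) once per execution environment rather than once per request, so that warm invocations reuse it. The sketch below simulates this with a hypothetical `createBackendClient` factory and a counter that shows how often initialization actually runs; in a real function, the cached object would typically be an HTTP or database client.

```typescript
// Hypothetical stand-in for an expensive client (SDK, connection pool, ...).
interface BackendClient {
  send(payload: string): string;
}

let initCount = 0;

function createBackendClient(): BackendClient {
  initCount++; // in reality: TLS handshakes, loading configuration, etc.
  return { send: (payload) => `sent:${payload}` };
}

// Module-scope cache: filled on the first (cold) invocation and reused by
// every later (warm) invocation in the same execution environment.
let cachedClient: BackendClient | undefined;

function getClient(): BackendClient {
  if (!cachedClient) cachedClient = createBackendClient();
  return cachedClient;
}

// The handler itself stays cheap on warm invocations.
function handler(payload: string): string {
  return getClient().send(payload);
}
```

Calling `handler` several times in the same process creates the client only once. The cache does not help the cold start itself: each new execution environment begins with a fresh module scope and pays the initialization cost again.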

While all providers reuse existing units that are currently idle, Azure Functions also allows an existing function unit to serve multiple requests at once (GCP offers this too with Cloud Functions V2, but it is not enabled by default). This makes good use of existing resources but is also a major pitfall if the function holds any state at all. To illustrate: imagine that each function execution is tied to a so-called “correlation ID”, which allows a process execution to be tracked in the logs across multiple parts of the system, an established pattern for distributed systems. This correlation ID is generated by a gateway and passed to the function via an HTTP header, and the function configures its logger to include it in each log entry it writes. This works like a charm while the application is in development and does not need to scale. But as soon as the traffic becomes too great for the number of running units, Azure will reuse existing units concurrently and, if no precautions are taken, an execution that starts while a previous one is still running will overwrite the correlation ID, and both executions will log with the same one.
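The failure mode can be reproduced without any cloud at all. The following is a simplified simulation, assuming a hypothetical logger that keeps the correlation ID in a module-level variable; two overlapping calls to `handleRequest` stand in for Azure reusing one instance for two concurrent executions.

```typescript
// Hypothetical logger with module-level (i.e. instance-wide) state.
let currentCorrelationId = "";
const logLines: string[] = [];

function log(message: string): void {
  logLines.push(`[${currentCorrelationId}] ${message}`);
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Each execution sets the ID from its request header, then awaits a backend call.
async function handleRequest(correlationId: string, backendLatencyMs: number): Promise<void> {
  currentCorrelationId = correlationId; // shared by every execution in this instance
  log("request received");
  await sleep(backendLatencyMs); // while this awaits, another execution can run
  log("backend responded");
}

// Two requests served concurrently by the same instance.
const bothDone = Promise.all([handleRequest("id-A", 20), handleRequest("id-B", 5)]);
```

After both executions finish, the last line logged by the first request reads `[id-B] backend responded`: request B overwrote the shared ID while request A was waiting on its backend.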

Again, there are solutions to this, for example AsyncLocalStorage in a Node.js execution environment. But because this behaviour is enabled by default in Azure Functions, it is very easy to stumble over the problem for the first time in production.
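In Node.js, `AsyncLocalStorage` from the built-in `node:async_hooks` module gives each execution its own store, and code awaited inside that execution keeps seeing the same store. A minimal sketch, assuming a hypothetical logger that reads the correlation ID from such a store instead of from a shared variable:

```typescript
import { AsyncLocalStorage } from "node:async_hooks";

// Per-execution context instead of a module-level variable.
const correlationStore = new AsyncLocalStorage<{ correlationId: string }>();
const logLines: string[] = [];

function log(message: string): void {
  const id = correlationStore.getStore()?.correlationId ?? "unknown";
  logLines.push(`[${id}] ${message}`);
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function handleRequest(correlationId: string, backendLatencyMs: number): Promise<void> {
  // Everything called (and awaited) inside run() sees this execution's store.
  await correlationStore.run({ correlationId }, async () => {
    log("request received");
    await sleep(backendLatencyMs);
    log("backend responded");
  });
}

// Two overlapping executions in the same process.
const bothDone = Promise.all([handleRequest("id-A", 20), handleRequest("id-B", 5)]);
```

Even though the two executions overlap, each log line carries the ID of the request that produced it.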

Deployment

Deployment is relatively simple for each cloud provider. Each one offers a CLI that can be used to deploy the functions directly while IaC tools such as Terraform, CloudFormation and Bicep offer a way to define the desired deployment state declaratively.

Zero downtime

The use case for this evaluation is a simple user-facing API, and uptime is a critical metric for such APIs. Whereas asynchronous event processing functions can easily recover from short downtimes if the event processing infrastructure has been configured to do so, a user cannot and will not wait indefinitely. AWS Lambda and GCP Cloud Functions come with zero-downtime deployments out of the box. When a function’s code is changed or its execution environment reconfigured (e.g. a Node.js version update), requests are seamlessly transferred to the new function instance as soon as it is ready, after which the old instance is destroyed.

Azure Functions do not do this. The function app needs to be restarted after a deployment, which causes some downtime. A mechanism called deployment slots allows developers to avoid downtime when a deployment only includes code changes, but if the configuration of the execution environment changes (for example, the runtime version), the slots are recreated and therefore restarted, which causes downtime. True zero-downtime deployment requires a more complex setup. Again, the level of abstraction of the configurable unit is the weak link here. Grouping the functions into a function app creates two significant deployment problems:

- Deployments to one of the functions in the app affect the lifecycle of all functions in the app.

- The impact of such a deployment is further exacerbated by the lack of a built-in zero-downtime deployment mechanism.

Local environment

Being able to test FaaS locally is an important feature, as otherwise every change would need a cloud deployment before it could be tested. This poses an interesting tooling challenge for the cloud providers: they must build an environment that can be installed on a local machine and that emulates the environment in which the functions will run once they are deployed in the cloud.

GCP offers the Functions Framework for this, AWS a CLI tool called the SAM (Serverless Application Model) CLI, and Azure its Core Tools.

Finally: an advantage for grouping functions

Coming back to our simple REST API: it consists of a number of resources, each with multiple endpoints. That means we want to develop, test and deploy multiple functions (each serving one endpoint) at once. This is where the concept of an overarching “function app” is very useful. Azure Functions Core Tools can be installed as a dev dependency and, when started, creates a local environment into which it deploys all of the functions defined in the function app. With one command, the developer has a local HTTP server with an endpoint for each HTTP function and an easy way to debug their code. The real power of FaaS, however, is that functions can be triggered by almost anything, not just an HTTP request. We will go into more detail on this in the next section, but examples of other useful function triggers are events in Event Hubs or the creation of a database entry. Core Tools also allows functions triggered in these ways to be debugged locally. That means that a message produced by an IoT device on the other side of the world can be consumed and debugged on a developer’s local machine. It is a powerful tool.

Google’s Functions Framework can also be installed as a dev dependency, but it can only emulate one function at a time, which limits its usefulness for running an API locally. One option for running all functions at once is to create a custom Express setup and register each function in it via a custom script. In that case, however, the cloud runtime environment is no longer being emulated.
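A custom setup of that kind can be sketched as a single local server with a routing table. This sketch assumes handlers with the `(req, res)` shape that Functions Framework HTTP functions use; it relies on Node's built-in `http` module instead of Express to stay dependency-free, so only handlers that stick to the core Node.js request/response API would run unchanged (Express's `req` and `res` extend these types). The function names and routes are hypothetical.

```typescript
import { createServer, IncomingMessage, ServerResponse } from "node:http";

type HttpFunction = (req: IncomingMessage, res: ServerResponse) => void;

// Hypothetical functions, each of which would be deployed separately in the cloud.
const getOrders: HttpFunction = (_req, res) => {
  res.writeHead(200, { "Content-Type": "application/json" });
  res.end(JSON.stringify([{ id: 1 }]));
};

const createOrder: HttpFunction = (_req, res) => {
  res.writeHead(201);
  res.end();
};

// Local-only routing table: "METHOD /path" -> function.
const routes: Record<string, HttpFunction> = {
  "GET /orders": getOrders,
  "POST /orders": createOrder,
};

function resolveRoute(method: string, url: string): HttpFunction | undefined {
  const path = new URL(url, "http://localhost").pathname; // drops the query string
  return routes[`${method} ${path}`];
}

// One local server hosting every function. This is plain Node.js, not the
// cloud runtime, so platform-specific behaviour is not emulated.
const localServer = createServer((req, res) => {
  const fn = resolveRoute(req.method ?? "GET", req.url ?? "/");
  if (fn) {
    fn(req, res);
  } else {
    res.writeHead(404);
    res.end();
  }
});
// localServer.listen(8080); // start it when running locally
```

The routing table replaces what the gateway or platform does in the cloud, which is exactly the part that is no longer emulated.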

AWS’ SAM CLI goes in another direction. It must be installed independently of the project and uses Docker to emulate Lambda functions. When running a local API, it combines the function code with the definitions in the CloudFormation (IaC) template and runs a Docker container for each function in the API. This allows it to emulate the cloud environment very closely, but it requires a much more complex local setup than Azure or GCP.

Integration with other services

As previously mentioned, the real power of FaaS comes from its flexibility: functions can be triggered by almost every other service offered by the hosting cloud provider. Our use case of a simple API barely scratches the surface of what is possible.

Although a comparison of the PaaS (Platform as a Service) services offered by each cloud provider is out of the scope of this article, they play a role in evaluating the strength of the FaaS offering in each cloud.

Gateway

When building a system with public-facing REST APIs, a gateway is an almost indispensable tool for implementing cross-cutting standards and functionality (API consistency, authentication, logging) and for providing a layer of abstraction that separates the API from the technology used to implement it.

Both Azure and AWS have very strong gateway offerings, both of which integrate seamlessly with their FaaS products. In fact, an API Gateway is the usual way to expose AWS Lambdas to external HTTP calls (the alternatives being Lambda function URLs or an Application Load Balancer). Both gateway products allow APIs to be defined with OpenAPI (Swagger) definitions and offer mechanisms for traffic throttling, IP block lists etc., in addition to JWT authentication. API endpoints are configured to call specific functions.
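Throttling at the gateway is commonly based on the token bucket algorithm (AWS describes API Gateway throttling in these terms, with a steady-state rate and a burst limit). The following is a minimal single-process sketch of the algorithm itself, not of any gateway's implementation; the rate and burst values are arbitrary, and the clock is passed in explicitly to keep the behaviour deterministic.

```typescript
// Minimal token bucket: tokens are added continuously at `ratePerSecond`,
// up to `burst` tokens; each request consumes one token or is rejected.
class TokenBucket {
  private readonly ratePerSecond: number;
  private readonly burst: number;
  private tokens: number;
  private lastRefillMs: number;

  constructor(ratePerSecond: number, burst: number, nowMs: number) {
    this.ratePerSecond = ratePerSecond;
    this.burst = burst;
    this.tokens = burst; // start full, so an initial burst is allowed
    this.lastRefillMs = nowMs;
  }

  tryConsume(nowMs: number): boolean {
    const elapsedSeconds = (nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(this.burst, this.tokens + elapsedSeconds * this.ratePerSecond);
    this.lastRefillMs = nowMs;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true; // forward the request to the function
    }
    return false; // a gateway would typically answer 429 Too Many Requests here
  }
}

// Usage: 2 requests/s steady state with a burst of 3.
const bucket = new TokenBucket(2, 3, 0);
const burstResults = [0, 0, 0, 0].map((t) => bucket.tryConsume(t)); // [true, true, true, false]
const afterOneSecond = bucket.tryConsume(1000); // true: 2 tokens were refilled
```

Rejecting excess requests at the gateway keeps them from ever starting a function instance, which protects both the function's scaling limits and the backends behind it.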

GCP ranks as the least mature of the three major cloud providers in this regard. It has been a few years since we last extensively explored the configuration of a gateway for a cloud function-based API. Back then, Apigee seemed to be the only option, but there was no direct integration with the Cloud Functions product. Since then, Google has upgraded its offering with a product called API Gateway, but we haven't had the opportunity to test the new integration with Google Cloud Functions yet.

Event driven

FaaS is event-driven. Function executions are triggered in response to events, be it an HTTP request, an event stream, a timer, or countless other things that can be tracked by the respective cloud provider. This encourages and supports the implementation of event-driven architectures and organically leads to workflows where the heavy lifting is done by non-blocking functions.

GCP offers PubSub, a gloriously simple publish/subscribe service that allows developers to define topics on which to publish messages and subscriptions to those topics, which decide where those messages should go. With the “Push” subscription type, a dedicated integration with Cloud Functions is hardly necessary, as functions can simply be treated as HTTP endpoints. The service supports message filtering, so that subscribers can select, through their subscription, the messages they want to consume, as well as OIDC-based authentication between topic and subscriber endpoint.
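With a push subscription, PubSub delivers each message as an HTTP POST whose JSON body wraps it in an envelope, with the payload base64-encoded in `message.data`; a 2xx response acknowledges the message. The sketch below shows only the decoding step such an endpoint would perform, assuming the publisher sends JSON; the `OrderEvent` payload shape and the project and subscription names are hypothetical.

```typescript
import { Buffer } from "node:buffer";

// Shape of a push request body (only the fields used here).
interface PushEnvelope {
  message: {
    data?: string; // base64-encoded payload
    attributes?: Record<string, string>;
    messageId: string;
  };
  subscription: string;
}

// Hypothetical payload published by an upstream service.
interface OrderEvent {
  orderId: string;
  status: string;
}

// Assumes a JSON payload; JSON.parse throws if the message has no or non-JSON data.
function decodePushMessage(body: PushEnvelope): { event: OrderEvent; attributes: Record<string, string> } {
  const raw = Buffer.from(body.message.data ?? "", "base64").toString("utf8");
  return { event: JSON.parse(raw) as OrderEvent, attributes: body.message.attributes ?? {} };
}

// Example envelope, built the way a push delivery would encode it.
const exampleBody: PushEnvelope = {
  message: {
    data: Buffer.from(JSON.stringify({ orderId: "42", status: "created" })).toString("base64"),
    attributes: { type: "order" },
    messageId: "1",
  },
  subscription: "projects/example-project/subscriptions/orders-push",
};

const decoded = decodePushMessage(exampleBody);
// An HTTP function would now process decoded.event and reply with a 2xx status to acknowledge.
```

Attributes such as `type` are also what subscription filters operate on, so a subscriber can receive only the messages it cares about without decoding the rest.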

In AWS, EventBridge operates in a similar way to PubSub. Instead of topics, EventBridge uses event buses, and instead of subscriptions, developers define rules that are evaluated each time an event arrives on the bus. A typical rule forwards matching events to an HTTP endpoint or to another AWS service such as a Lambda function.
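To illustrate how rules select events, here is a simplified sketch of EventBridge-style pattern matching: each field listed in a rule's event pattern holds an array of accepted values, and nested objects match nested fields of the event. This is not EventBridge's implementation; the `matches` helper and the `app.orders` source are hypothetical, and only exact matches on scalar fields are handled (no prefix, numeric or exists filters, and no array-valued event fields).

```typescript
// Simplified EventBridge-style pattern: leaf values are arrays of accepted
// exact values; nested objects match nested fields of the event.
type EventPattern = { [key: string]: Array<string | number | boolean> | EventPattern };

function matches(event: Record<string, unknown>, pattern: EventPattern): boolean {
  return Object.entries(pattern).every(([key, expected]) => {
    const actual = event[key];
    if (Array.isArray(expected)) {
      return expected.some((value) => value === actual);
    }
    // Nested pattern: the event must have an object at this key that matches it.
    return typeof actual === "object" && actual !== null &&
      matches(actual as Record<string, unknown>, expected);
  });
}

// Hypothetical rule: route newly created orders to a Lambda target.
const orderCreatedRule: EventPattern = {
  source: ["app.orders"],
  "detail-type": ["OrderCreated"],
  detail: { status: ["created"] },
};

const incoming = {
  source: "app.orders",
  "detail-type": "OrderCreated",
  detail: { orderId: "42", status: "created" },
};

const routed = matches(incoming, orderCreatedRule); // true
const ignored = matches({ ...incoming, source: "app.billing" }, orderCreatedRule); // false
```

Fields that the pattern does not mention, such as `detail.orderId`, are ignored, so producers can add data to events without breaking existing rules.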

Azure Functions' integration with the rest of the Azure ecosystem is first class. Event-based function triggers and outputs are defined as part of the source code of the functions themselves. Configuring a function to listen to an Event Hubs stream, process the messages, and then publish the results to a downstream service of a different type, such as Service Bus, is as easy as a few entries in a JSON file (if using TypeScript). This allows for the rapid creation of asynchronous workflows where each part in the workflow chain can use the technology best suited to its use case.
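As an illustration of the JSON-based approach, in the Node.js programming model that uses `function.json` files, an Event Hubs trigger and a Service Bus output are declared as two bindings, and the code simply receives the input as a parameter and assigns the output to `context.bindings.<name>`. The sketch keeps the binding declaration as a comment and uses a minimal, hypothetical `Context` type instead of the `@azure/functions` package so that it runs standalone; the hub, queue, binding and connection-setting names are assumptions.

```typescript
// function.json (sketch; all names are assumptions):
// {
//   "bindings": [
//     { "type": "eventHubTrigger", "direction": "in", "name": "readings",
//       "eventHubName": "telemetry", "connection": "EventHubConnection", "cardinality": "many" },
//     { "type": "serviceBus", "direction": "out", "name": "summary",
//       "queueName": "telemetry-summaries", "connection": "ServiceBusConnection" }
//   ]
// }

// Minimal stand-in for the runtime's context object (hypothetical type).
interface Context {
  bindings: Record<string, unknown>;
}

interface Reading {
  deviceId: string;
  temperature: number;
}

// The runtime calls this with a batch of Event Hubs messages ("cardinality": "many")
// and sends whatever is assigned to context.bindings.summary to the Service Bus queue.
// In a deployed app, this would be the module's default export.
async function processReadings(context: Context, readings: Reading[]): Promise<void> {
  context.bindings.summary = {
    count: readings.length,
    maxTemperature: Math.max(...readings.map((r) => r.temperature)),
  };
}

// Local usage with a fake context, as a unit test might do it.
const ctx: Context = { bindings: {} };
const done = processReadings(ctx, [
  { deviceId: "d1", temperature: 21.5 },
  { deviceId: "d2", temperature: 23 },
]);
```

Because the function never touches an Event Hubs or Service Bus SDK directly, swapping the output to another binding type is a change to `function.json` rather than to the code.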

Conclusion

There are many other relevant topics to consider when comparing the FaaS offerings of the cloud providers, such as logging and monitoring, which cannot be covered here due to space constraints. To conclude the comparison: the FaaS offerings of the big three all provide a great way for development teams to focus on business value rather than on infrastructure, scaling and orchestration. None of them has the perfect combination of features. Google Cloud Functions offers the quickest way to create and deploy an API using only functions, whereas Azure Functions has better tooling and a more mature set of IoT PaaS services with which to integrate. AWS Lambda is similar but has a more complex local setup, which reduces a team’s initial velocity. The use case should provide the frame in which to evaluate the impact of the stated strengths and weaknesses of each FaaS implementation.